PyTorch-based LLaMA model architecture

LlamaForCausalLM(

(model): LlamaModel(

(embed_tokens): Embedding(128256, 2048)

(layers): ModuleList(

(0-15): 16 x LlamaDecoderLayer(

(self_attn): LlamaAttention(

(q_proj): Linear4bit(in_features=2048, out_features=2048, bias=False)

(k_proj): Linear4bit(in_features=2048, out_features=512, bias=False)

(v_proj): Linear4bit(in_features=2048, out_features=512, bias=False)

(o_proj): Linear4bit(in_features=2048, out_features=2048, bias=False)

)

(mlp): LlamaMLP(

(gate_proj): Linear4bit(in_features=2048, out_features=8192, bias=False)

(up_proj): Linear4bit(in_features=2048, out_features=8192, bias=False)

(down_proj): Linear4bit(in_features=8192, out_features=2048, bias=False)

(act_fn): SiLUActivation()

)

(input_layernorm): LlamaRMSNorm((2048,), eps=1e-05)

(post_attention_layernorm): LlamaRMSNorm((2048,), eps=1e-05)

)

)

(norm): LlamaRMSNorm((2048,), eps=1e-05)

(rotary_emb): LlamaRotaryEmbedding()

)

(lm_head): Linear(in_features=2048, out_features=128256, bias=False)

)

The overall architecture of the LLaMA model

LlamaForCausalLM

├─ model: LlamaModel

│ ├─ embed_tokens

│ ├─ layers (LlamaDecoderLayer x16)

│ ├─ norm

│ └─ rotary_emb

└─ lm_head- embed_tokens

- Converts token IDs into vector representations (embeddings).

- Size:

(128256, 2048)→ 128,256 tokens, each represented by a 2048-dimensional vector.

- layers (LlamaDecoderLayer x16)

- A stack of Transformer decoder layers.

- Each layer is a

LlamaDecoderLayer.

- norm

- Final layer normalization applied to the entire sequence.

- Ensures stable training and normalized representations.

- rotary_emb

- Rotary positional embeddings.

- Injects positional information into token embeddings for attention mechanisms.

- lm_head

- Maps the final hidden states back to vocabulary scores.

- Used to predict the next token in autoregressive generation.

Waht embed_tokens does

Suppose your vocabulary has only 5 words: ["I", "want", "to", "learn", "English"], Instead of representing them as integers (token IDs)

"I" → 0

"want" → 1

"to" → 2

"learn" → 3

"English" → 4We map each token to a 5-dimensional vector (instead of a huge 2048-dim vector).

Embedding table (5-dim vectors):

"I" → [0.1, 0.2, 0.5, 0.0, 0.1]

"want" → [0.3, 0.1, 0.4, 0.2, 0.0]

"to" → [0.0, 0.1, 0.0, 0.7, 0.2]

"learn" → [0.5, 0.3, 0.1, 0.0, 0.2]

"English" → [0.2, 0.5, 0.0, 0.1, 0.6]Now each word is represented by a vector in a 5-dimensional space, which the model can understand mathematically.

Why it’s useful

- Similar words will have similar vectors.

- For example, “learn” and “study” could have vectors like

[0.5, 0.3, 0.1, 0.0, 0.2]and[0.5, 0.3, 0.1, 0.0, 0.25].

The model can do attention and MLP operations on these vectors to understand relationships, like:

- “I want” → sequence understanding

- “learn English” → purpose / object of learning

Visual intuition in 5-Dimensional

Imagine a 5-dimensional space (you can’t fully draw it, but conceptually):

- Each word is a point in this space

- Self-attention calculates similarity between points

- MLP transforms these points into higher-level features

- Finally, the model predicts the next word using these vector features

Function of embed_tokens:

- Converts textual tokens into vectors that the model can understand and compute with.

- Original tokens or token IDs are discrete integers; the model cannot perform direct operations on them.

- The embedding layer maps each token into a continuous vector space (e.g., 2048-dimensional vectors),

- enabling operations like attention computation, matrix multiplication, and non-linear transformations.

- One-sentence summary: The embedding layer translates words into the model’s numerical language.

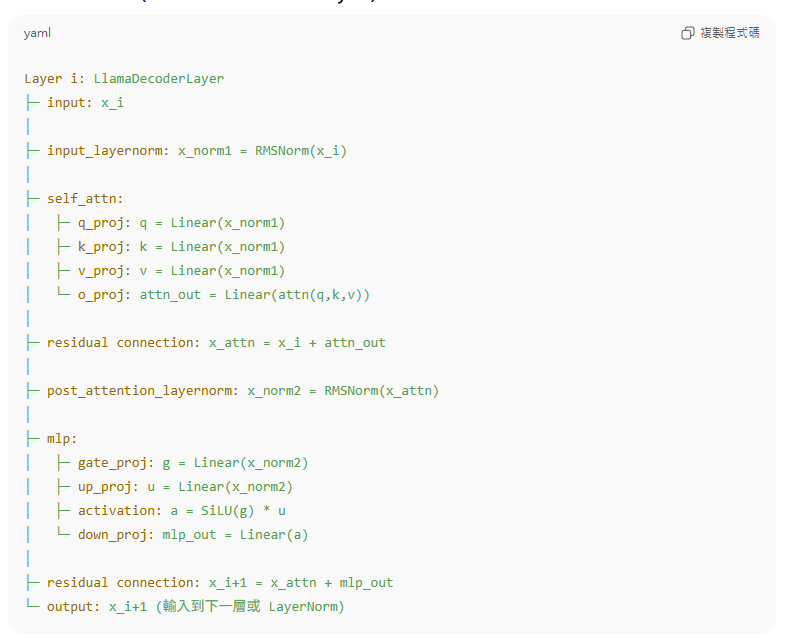

Text → Token IDs → Embedding Vectors → Attention / Transformer OperationsLet’s take a closer look at the two core modules in the Decoder Layer

LlamaAttention (Self-Attention)

Function:

- Allows the model to look at the entire sequence and determine which tokens are most important for the current token.

- In other words, it captures long-range dependencies.

- Example: “I want to learn English” → When processing “English”, the model attends to “learn”, understanding it is the action’s target.

Key Steps:

- Query, Key, Value calculation

- For each token, generate:

- Q (Query): Who am I looking for?

- K (Key): Who am I?

- V (Value): What information can I provide?

- For each token, generate:

- Attention weight calculation

- Each token’s output is a weighted sum of all tokens’ V vectors.

- Output projection

- The result is projected back to the original embedding dimension (e.g., 2048) via o_proj.

Plain Explanation:

The attention layer is basically “looking at the whole sentence, picking the most relevant words, and combining their information.”

(self_attn): LlamaAttention(

(q_proj): Linear4bit(in_features=2048, out_features=2048, bias=False)

(k_proj): Linear4bit(in_features=2048, out_features=512, bias=False)

(v_proj): Linear4bit(in_features=2048, out_features=512, bias=False)

(o_proj): Linear4bit(in_features=2048, out_features=2048, bias=False)

)

q_proj (Query)

Dimension: 2048 → 2048 (keeps the same)

Each token generates a Query vector, which is used to “find relevant tokens” in the sequence.

k_proj (Key)

Dimension: 2048 → 512 (reduces dimensionality)

Each token generates a Key vector, serving as a “identity label” for attention comparison.

v_proj (Value)

Dimension: 2048 → 512 (reduces dimensionality)

Each token generates a Value vector, which contains the information that can be passed to other tokens.

o_proj (Output)

Dimension: 512 → 2048 (or merged from multiple heads back to 2048)

Projects the attention-weighted sum back to the original embedding dimension.#assuming batch_size=1,seq_len=3,hidden_dim=2048

input vector: [batch_size, seq_len, hidden_dim] = [1, 3, 2048]

X = [x1, x2, x3] # shape: [3, 2048]

Step 1: Q/K/V Projection

Q = q_proj(X) # [3, 2048]

K = k_proj(X) # [3, 512]

V = v_proj(X) # [3, 512]

Sep 2: Calculating the attention weights

attention_scores = softmax(Q × K^T / sqrt(d_k)) # d_k=512

Step 3: Weighted Sum

context = attention_scores × V # shape: [3, 512]

Step 4: O projection

output = o_proj(context) # shape: [3, 2048]

LlamaAttention lets each token “look at” all other tokens, pick the most relevant information, and combine it into a new representation.

LlamaMLP (Feed-Forward Layer)

The MLP takes each token’s vector (a 2048-dimensional vector), applies non-linear changes to it, and helps the model understand complex patterns. Attention finds the important info, and the MLP processes and reshapes it.

gate_proj: 2048 → 8192

up_proj: 2048 → 8192

act_fn: SiLU

down_proj: 8192 → 2048Step by Step: LlamaMLP

- Up-projection (gate_proj / up_proj)

- Increases the vector dimension from 2048 → 8192.

- Imagine expanding a simple message into more details, allowing the model to capture more latent features.

- Non-linear activation (SiLU)

- The SiLU function introduces non-linear changes to the vector, not just linear scaling.

- This process allows the model to learn complex patterns, for example:

- A word in a sentence is important only in a specific context.

- Multiple words combine to form special meanings.

- Down-projection (down_proj)

- Reduces the vector dimension from 8192 → 2048.

- Like compressing the processed message back to its original size, keeping important information and discarding redundancy.

MLP as a Mini Processing Factory

| Step / Process | Analogy | Explanation |

|---|---|---|

| Up-projection (expand) | Place the raw material on a large workbench, break it into more parts for easier processing | Expands the 2048-d vector to 8192-d, exposing more latent features |

| Activation function (SiLU) | Pass the parts through machines to reshape them or create new features | Introduces non-linear transformations to learn complex patterns |

| Down-projection (compress) | Reassemble the processed parts into a finished product, keeping the most important pieces | Reduce back to 2048-d, retaining key information while discarding redundancy |

Conclusion:

The attention layer finds the important information, and the MLP layer processes, refines, and mixes this information into feature vectors that the model can understand better, then passes them to the next decoder layer or output.

Both Attention and MLP Are Important

- If there is only Attention: the model can capture context, but cannot effectively process the information.

- If there is only MLP: the model can only process each token individually, unable to capture dependencies across the sequence.

The strength of Transformers comes from the interaction between Attention and MLP:

- Attention identifies relationships between tokens.

- MLP refines and transforms the information.

Stacking multiple layers allows the model to capture long-range dependencies and complex semantics.

LlamaRMSNorm (Input / Post-Attention / Final Norm)

Function:

- Maintain numerical stability

- Ensures that input vectors to each layer have a consistent scale across dimensions, preventing gradient vanishing or explosion.

- Accelerate convergence

- Helps the model train faster and more stably.

- Not standard normalization to mean 0

- RMSNorm scales vectors based on their Root Mean Square (RMS), without subtracting the mean.

- This approach is effective for Transformer performance.

Intuitive Understanding:

- Imagine a 2048-d vector. After Attention or MLP, some dimensions may be very large, others very small.

- RMSNorm acts like adjusting the “volume” of the vector to a reasonable range, so the next layer can process it stably.

Common placements in LLaMA:

| Layer | Function |

|---|---|

| input_layernorm | Normalize vectors before Attention or MLP |

| post_attention_layernorm | Normalize after Attention, stabilizing MLP input |

| final norm (model.norm) | Normalize the final output of the LLaMA model, ensuring stability |

lm_head (Linear Projection to Vocabulary)

The final layer of LLaMA is:

lm_head = Linear(in_features=2048, out_features=128256, bias=False)Purpose:

- It projects the model’s final hidden state into the vocabulary space, producing logits for each token.

- In simple terms, it converts a 2048-dimensional semantic vector into a score for every word in the vocabulary.

Intuitive understanding:

- The hidden vector from the model is like a compressed semantic summary of the context.

lm_headacts like a lookup or projection table that expands this summary into scores for each token.- Tokens with higher scores are more likely to be chosen as the model’s prediction.

- These logits are usually followed by a softmax to convert them into probabilities for generating the next token.

Summary in the model flow:

- Embedding → token → vector

- Decoder Layers → attention + MLP + RMSNorm

- Final RMSNorm (

model.norm) → normalize output vector - lm_head → project vector to vocabulary → logits → softmax → predicted next token

🧠 Official & Authoritative Sources

- Meta AI – LLaMA Official Blog Post

👉 https://ai.meta.com/blog/llama-2/

Official introduction to the LLaMA models from Meta AI. - Hugging Face – LLaMA Model Card

👉 https://huggingface.co/meta-llama

Provides details, architecture, and usage examples of LLaMA models. - ArXiv – Transformer Paper (Vaswani et al., 2017)

👉 https://arxiv.org/abs/1706.03762

The original paper: “Attention Is All You Need.” Foundational to all modern LLMs. - ArXiv – LLaMA: Open and Efficient Foundation Language Models

👉 https://arxiv.org/abs/2302.13971

Official research paper describing LLaMA’s architecture and performance.