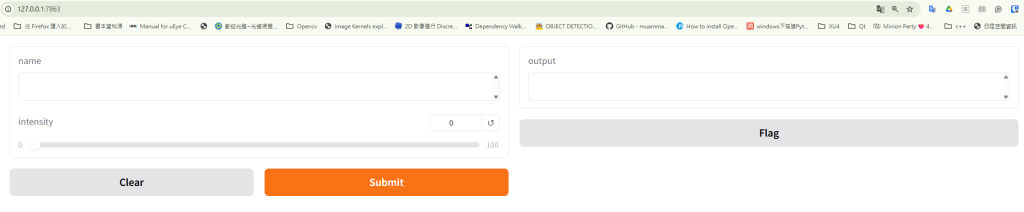

Gradio is an open-source Python framework that makes it easy to build interactive web-based UIs for machine learning (ML) models, data pipelines, or any Python function.

Instead of writing frontend code (like React, HTML, or JavaScript), you can just wrap your Python function with Gradio, and it automatically generates a clean, shareable UI that runs in the browser.

🔑 Key Points About the Gradio UI Framework

- Language: Built for Python, and works with any model you can call from Python (TensorFlow, PyTorch, scikit-learn, Hugging Face, etc.).

- UI Elements: Provides pre-built components like textboxes, sliders, image uploaders, dropdowns, audio/video inputs, chat UIs, etc.

- Zero Frontend Code: You don’t need to write HTML/CSS/JS — Gradio handles the frontend automatically.

- Interactive Demos: Lets users interact with models (e.g., type text for GPT, upload an image for a classifier).

- Shareability: Passing share=True to launch() generates a temporary public link to the app running on your machine, and Hugging Face Spaces offers permanent hosting, so others can try your model without installing anything.

- Integration: Works well with Jupyter, Colab, or as standalone apps.

⚡ Example

```python
import gradio as gr

def greet(name):
    return f"Hello {name}!"

demo = gr.Interface(fn=greet, inputs="text", outputs="text")
demo.launch()
```

👉 This creates a browser-based UI where users type a name into a text box and get a greeting.

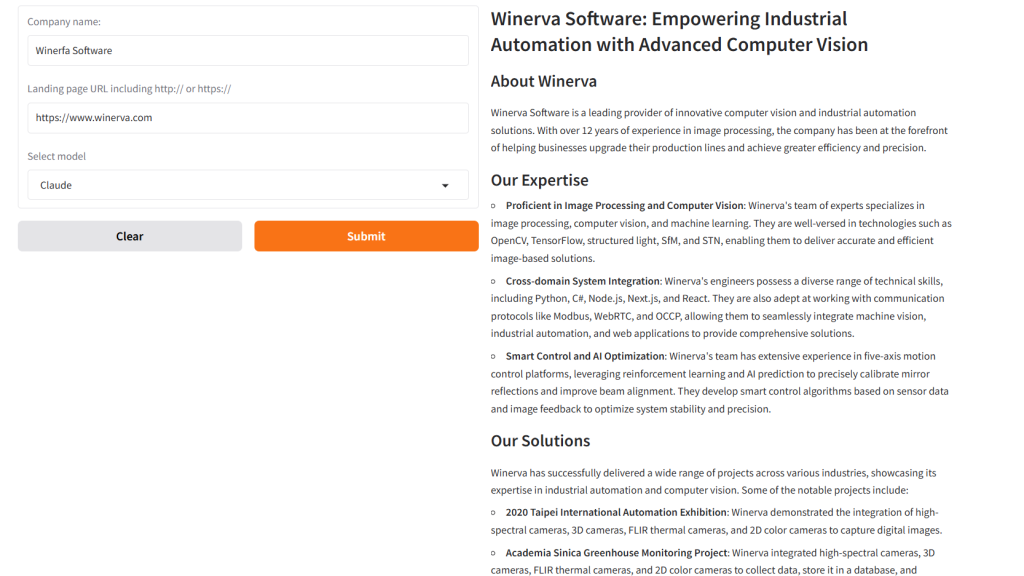

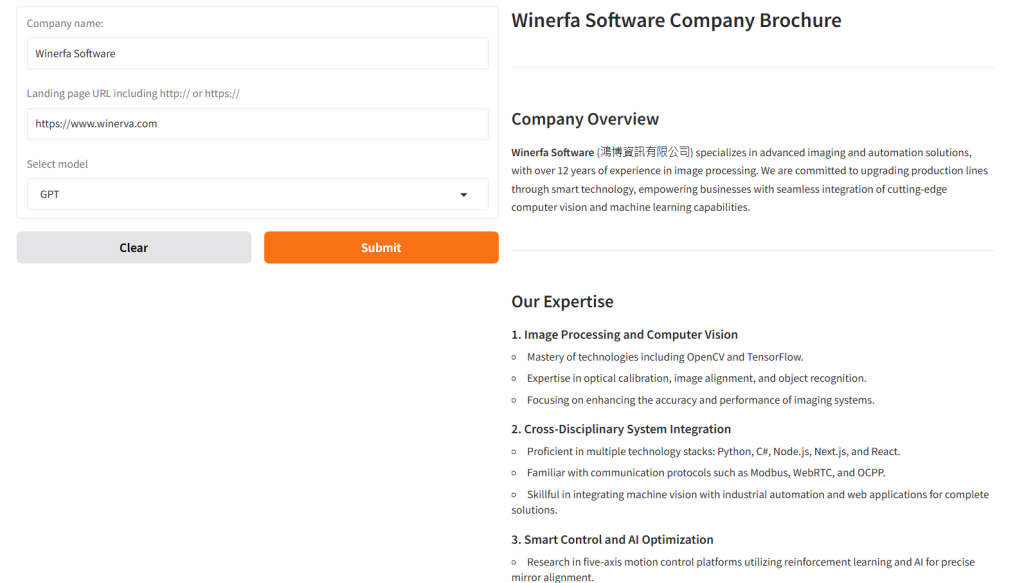

I built a Gradio-based user interface that lets users enter a company name, provide the company’s website URL, and choose GPT or Claude as the model. The app then fetches the landing page, passes its text to the selected model, and streams back a concise company brochure tailored for prospective customers, investors, and recruits.

The system prompt is:

“You are an assistant that analyzes the contents of a company website landing page and creates a short brochure about the company for prospective customers, investors and recruits. Respond in markdown.”

```python
# imports

import os
import requests
from bs4 import BeautifulSoup
from typing import List
from dotenv import load_dotenv
from openai import OpenAI
import google.generativeai
import anthropic

import gradio as gr # oh yeah!
```

```python
# Load environment variables in a file called .env
# Print the key prefixes to help with any debugging

load_dotenv(override=True)
openai_api_key = os.getenv('OPENAI_API_KEY')
anthropic_api_key = os.getenv('ANTHROPIC_API_KEY')
google_api_key = os.getenv('GOOGLE_API_KEY')

if openai_api_key:
    print(f"OpenAI API Key exists and begins {openai_api_key[:8]}")
else:
    print("OpenAI API Key not set")

if anthropic_api_key:
    print(f"Anthropic API Key exists and begins {anthropic_api_key[:7]}")
else:
    print("Anthropic API Key not set")

if google_api_key:
    print(f"Google API Key exists and begins {google_api_key[:8]}")
else:
    print("Google API Key not set")
```

```python
# Connect to OpenAI, Anthropic and Google; comment out the Claude or Google lines if you're not using them

openai = OpenAI()

claude = anthropic.Anthropic()

google.generativeai.configure()
```

```python
# Let's create a call that streams back results
# If you'd like a refresher on Generators (the "yield" keyword),
# Please take a look at the Intermediate Python notebook in week1 folder.

def stream_gpt(prompt):
    # system_message is a global defined further down; it must exist before this function is called
    messages = [
        {"role": "system", "content": system_message},
        {"role": "user", "content": prompt}
    ]
    stream = openai.chat.completions.create(
        model='gpt-4o-mini',
        messages=messages,
        stream=True
    )
    result = ""
    for chunk in stream:
        # Some chunks carry no text, so fall back to an empty string
        result += chunk.choices[0].delta.content or ""
        # Yield the full text so far, not just the new fragment
        yield result
```
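Gradio treats a generator function as a streaming handler: each yielded value replaces what the output component currently shows, which is why `stream_gpt` yields the accumulated text rather than each new fragment. The pattern can be sketched without any API call. Here a hard-coded list stands in for the model's stream, and `fake_stream` and `accumulate` are hypothetical names used only for illustration, not part of the OpenAI or Gradio APIs:

```python
from typing import Iterable, Iterator, Optional


def fake_stream() -> Iterator[str]:
    """Stand-in for an API stream: yields text fragments one at a time."""
    yield from ["Hello", ", ", "world", "!"]


def accumulate(chunks: Iterable[Optional[str]]) -> Iterator[str]:
    """Yield the text received so far after each new fragment arrives."""
    result = ""
    for chunk in chunks:
        result += chunk or ""  # a fragment may be None or empty; treat it as ""
        yield result


# Each snapshot is what the UI would display at that moment
snapshots = list(accumulate(fake_stream()))
for snapshot in snapshots:
    print(snapshot)
```

Yielding only the new fragment would make the output flicker between fragments instead of growing, because every yield overwrites the previous one.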

```python
def stream_claude(prompt):
    result = claude.messages.stream(
        model="claude-3-haiku-20240307",
        max_tokens=1000,
        temperature=0.7,
        system=system_message,
        messages=[
            {"role": "user", "content": prompt},
        ],
    )
    response = ""
    with result as stream:
        for text in stream.text_stream:
            response += text or ""
            yield response
```

```python
def stream_model(prompt, model):
    if model=="GPT":
        result = stream_gpt(prompt)
    elif model=="Claude":
        result = stream_claude(prompt)
    else:
        raise ValueError("Unknown model")
    yield from result
```

```python
# A class to represent a Webpage

class Website:
    url: str
    title: str
    text: str

    def __init__(self, url):
        self.url = url
        response = requests.get(url)
        self.body = response.content
        soup = BeautifulSoup(self.body, 'html.parser')
        self.title = soup.title.string if soup.title else "No title found"
        if soup.body:
            # Drop elements that carry no readable text before extracting it
            for irrelevant in soup.body(["script", "style", "img", "input"]):
                irrelevant.decompose()
            self.text = soup.body.get_text(separator="\n", strip=True)
        else:
            # Some responses have no <body>; fall back to empty text instead of failing
            self.text = ""

    def get_contents(self):
        return f"Webpage Title:\n{self.title}\nWebpage Contents:\n{self.text}\n\n"
```
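To see what the clean-up step in `Website` achieves, it can help to run the same idea on a small inline page. The sketch below deliberately swaps BeautifulSoup for the standard library's `html.parser` so it runs with no extra installs; it is not the code the app uses, and `TextExtractor` and `sample_html` are hypothetical names. It only skips `<script>` and `<style>`, since `<img>` and `<input>` carry no text anyway.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, ignoring anything inside <script> or <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.parts.append(text)


sample_html = (
    "<body><h1>Acme Corp</h1>"
    "<script>track();</script>"
    "<style>h1 { color: red; }</style>"
    "<p>We build rockets.</p></body>"
)

extractor = TextExtractor()
extractor.feed(sample_html)
extractor.close()  # flush any text still buffered by the parser

# Join with newlines, mirroring get_text(separator="\n", strip=True)
page_text = "\n".join(extractor.parts)
print(page_text)
```

Only the heading and the paragraph survive, which is the kind of input the model needs: readable text without tracking scripts or styling rules.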

```python
# With massive thanks to Bill G. who noticed that a prior version of this had a bug! Now fixed.

system_message = (
    "You are an assistant that analyzes the contents of a company website landing page "
    "and creates a short brochure about the company for prospective customers, investors and recruits. "
    "Respond in markdown."
)
```

```python
def stream_brochure(company_name, url, model):
    prompt = f"Please generate a company brochure for {company_name}. Here is their landing page:\n"
    # Fetch the landing page and append its title and text to the prompt
    prompt += Website(url).get_contents()
    if model=="GPT":
        result = stream_gpt(prompt)
    elif model=="Claude":
        result = stream_claude(prompt)
    else:
        raise ValueError("Unknown model")
    yield from result
```

```python
view = gr.Interface(
    fn=stream_brochure,
    inputs=[
        gr.Textbox(label="Company name:"),
        gr.Textbox(label="Landing page URL including http:// or https://"),
        gr.Dropdown(["GPT", "Claude"], label="Select model")],
    outputs=[gr.Markdown(label="Brochure:")],
    flagging_mode="never"
)
view.launch()
```
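The URL textbox asks for a full address including the scheme, but nothing in the app enforces it, so a bare `example.com` would fail inside `requests.get`. One possible guard, sketched here with the standard library only, is to check the input before fetching. `is_fetchable_url` is a hypothetical helper, not part of Gradio or the code above:

```python
from urllib.parse import urlparse


def is_fetchable_url(url: str) -> bool:
    """Return True if the URL has an http(s) scheme and a host name."""
    parsed = urlparse(url.strip())
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


# Try a valid URL, one missing its scheme, and one with the wrong scheme
for candidate in ["https://example.com", "example.com", "ftp://example.com"]:
    print(candidate, "->", is_fetchable_url(candidate))
```

In the app, `stream_brochure` could call a check like this first and raise `gr.Error` with a short message when it fails, so the user sees a readable prompt to fix the URL rather than a stack trace.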